Background and Goal

The objective of this lab was to gain experience processing hyperspectral remotely sensed data. Because hyperspectral images contain many narrow bands at specific wavelengths, it is common for several of those bands to be corrupted by atmospheric effects or sensor error. These noisy bands must be detected and removed before further processing. We then learned how to detect target features in the hyperspectral image.

Methods

For this lab we used Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) data of a geologic field site in Nevada. The image consisted of 255 bands. Anomaly detection was used to compare results between an image that was not preprocessed and an image that had its bad bands removed. Anomaly detection identifies pixels whose spectral signatures deviate significantly from the rest of the image. First, we ran anomaly detection on the AVIRIS image without removing any bands. Then we used the Bad Band Selection Tool to identify the bad bands within the image. Figure 1 shows this tool with the bad bands highlighted. A total of 20 bands were removed, and anomaly detection was run again on the cleaned image. The two anomaly detection outputs were then compared for differences.

Figure 1: The bad band selection tool, with the bad bands highlighted in red.
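
The anomaly detection workflow described above can be illustrated with a small sketch. The lab does not state which algorithm the software uses, so this is an assumption: a simplified global detector that scores each pixel by its distance from the image mean spectrum, measured in per-band standard deviations. The function names, the threshold, and the toy pixel values are all hypothetical and are not taken from the AVIRIS data.

```python
from statistics import mean, pstdev


def drop_bands(pixels, bad_bands):
    """Return each pixel spectrum with the listed band indices removed."""
    bad = set(bad_bands)
    return [[v for i, v in enumerate(s) if i not in bad] for s in pixels]


def anomaly_scores(pixels):
    """Distance of each pixel from the image mean spectrum,
    measured in per-band standard deviations (a simplified detector)."""
    n_bands = len(pixels[0])
    columns = [[p[b] for p in pixels] for b in range(n_bands)]
    means = [mean(c) for c in columns]
    # Fall back to 1.0 so a constant band does not cause division by zero.
    stds = [pstdev(c) or 1.0 for c in columns]
    return [
        sum(((p[b] - means[b]) / stds[b]) ** 2 for b in range(n_bands)) ** 0.5
        for p in pixels
    ]


def anomaly_mask(pixels, threshold):
    """Flag pixels whose anomaly score exceeds the threshold."""
    return [score > threshold for score in anomaly_scores(pixels)]


# Toy "image": 6 pixels x 4 bands with invented values.
# Band index 3 plays the role of a noisy bad band.
pixels = [
    [0.10, 0.20, 0.30, 0.90],
    [0.11, 0.21, 0.29, 0.05],
    [0.09, 0.19, 0.31, 0.70],
    [0.10, 0.20, 0.30, 0.10],
    [0.40, 0.60, 0.10, 0.50],  # spectrally unusual pixel
    [0.10, 0.21, 0.30, 0.45],
]

cleaned = drop_bands(pixels, bad_bands=[3])
mask = anomaly_mask(cleaned, threshold=2.0)
print(mask)
```

Running the detector once on `pixels` and once on `cleaned` mirrors the comparison made in the lab, since a noisy band adds spread that is unrelated to the surface material.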

Next, we explored target detection. This process creates a mask that highlights pixels matching a given spectral signature (the target). For this lab, the target was the mineral buddingtonite. First, we used a simple target detection method with a spectral signature collected from a classified image. This creates a mask in much the same way as anomaly detection, highlighting areas that share the target's spectral signature. Next, we used a signature from the USGS spectral library and compared the two outputs for differences.
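
As a rough illustration of how a target mask can be built from a reference signature, the sketch below uses the spectral angle between each pixel and the reference. This is an assumption for illustration only: the lab does not say which matching method the software applies, and the reference values here are invented rather than a real buddingtonite spectrum. The `max_angle` cutoff is likewise hypothetical.

```python
import math


def spectral_angle(spectrum, reference):
    """Angle in radians between two spectra treated as vectors.
    Smaller angles mean more similar spectral shapes."""
    dot = sum(a * b for a, b in zip(spectrum, reference))
    norm = math.sqrt(sum(a * a for a in spectrum)) * math.sqrt(
        sum(b * b for b in reference)
    )
    if norm == 0:
        return math.pi / 2  # treat an all-zero spectrum as a non-match
    # Clamp to [-1, 1] to absorb floating point rounding before acos.
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def target_mask(pixels, reference, max_angle=0.1):
    """Flag pixels whose spectral angle to the reference is within max_angle."""
    return [spectral_angle(p, reference) <= max_angle for p in pixels]


# Invented 4-band reference signature (not a real library spectrum).
reference = [0.2, 0.4, 0.1, 0.5]

pixels = [
    [0.4, 0.8, 0.2, 1.0],      # same shape as the reference, twice as bright
    [0.5, 0.1, 0.6, 0.2],      # different shape
    [0.21, 0.39, 0.11, 0.49],  # close to the reference
]

mask = target_mask(pixels, reference)
print(mask)
```

Because the angle ignores overall brightness, the first pixel still matches even though it is twice as bright as the reference. Swapping in a signature from a different source, as the lab did, only changes the `reference` argument.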

Results

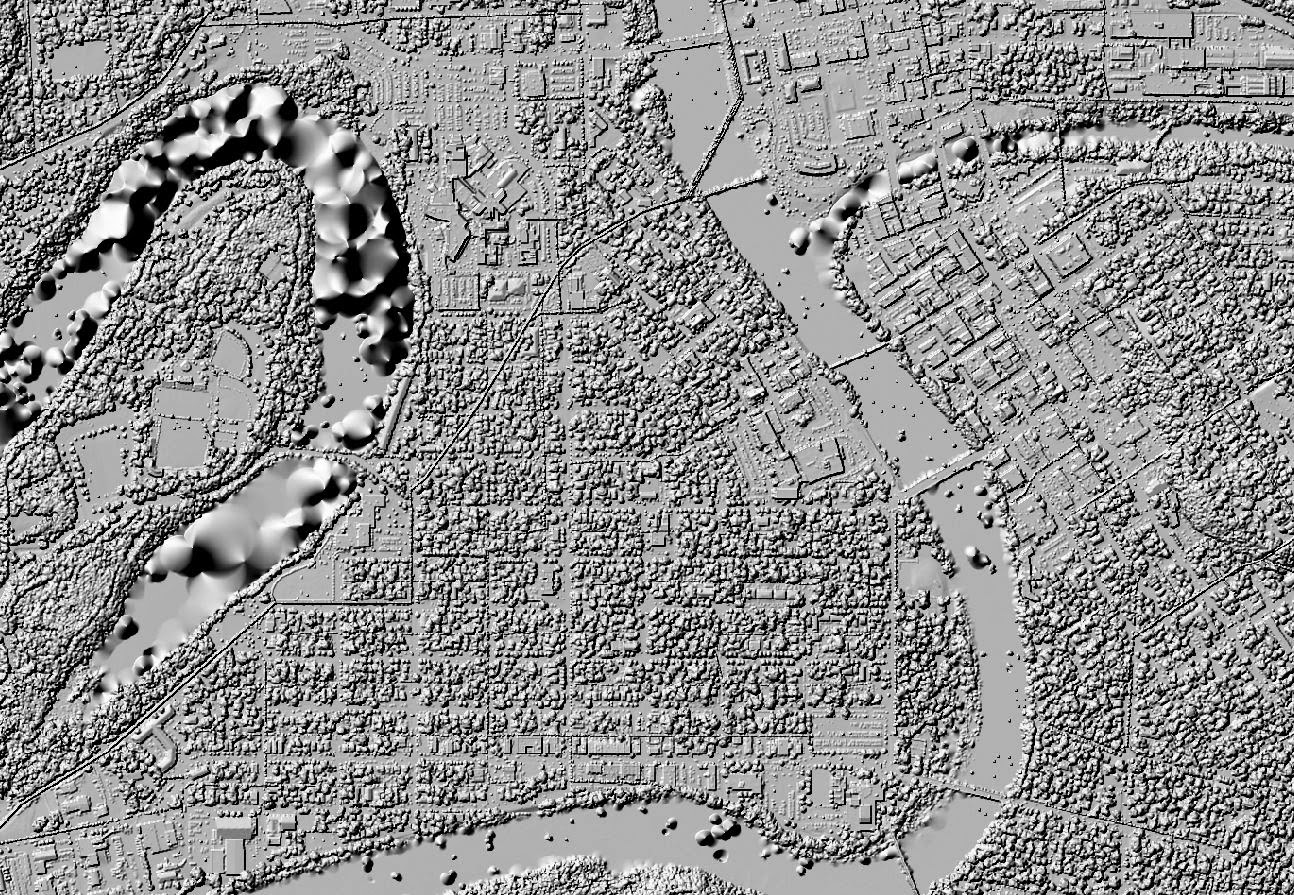

Figures 2 and 3 show the anomaly masks for the image without bands removed and the image with bad bands removed, respectively. The two masks look nearly identical at a glance, but the swipe tool in the Spectral Analysis Workstation revealed a difference: after the bad bands were removed, the anomalies were highlighted in the same areas but were slightly larger.

Figure 2: The anomaly mask of the image without the bad bands removed.

Figure 3: The anomaly mask of the image with the bad bands removed. The anomalies are in the same spot, though they are slightly larger.
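
The comparison in this lab was done visually with the swipe tool. If the two masks were exported as same-sized grids, the difference could also be counted directly. The sketch below assumes that setup; the `compare_masks` helper and the small masks are hypothetical and do not represent the lab's actual output.

```python
def compare_masks(before, after):
    """Count flagged pixels in two same-sized masks and where they differ."""
    pairs = [
        (bool(b), bool(a))
        for row_b, row_a in zip(before, after)
        for b, a in zip(row_b, row_a)
    ]
    return {
        "before": sum(b for b, _ in pairs),
        "after": sum(a for _, a in pairs),
        "both": sum(b and a for b, a in pairs),
        "only_after": sum(a and not b for b, a in pairs),
        "only_before": sum(b and not a for b, a in pairs),
    }


# Invented 3 x 4 masks: 1 marks an anomalous pixel.
before = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
after = [
    [0, 1, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

stats = compare_masks(before, after)
print(stats)
```

A large `both` count with a nonzero `only_after` count and a zero `only_before` count would correspond to anomalies that stay in the same place but grow.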

Figure 4 shows the results of the target detection overlay. The simple target detection failed, producing only a grey box for the target mask. The target detection using the USGS spectral library signature worked as expected. I suspect that the simple target detection, had it worked, would have shown more spread and speckling, because its spectral signature was derived from a classification image.

Sources

Erdas Imagine. (2010). [AVIRIS hyperspectral image]. Hexagon Geospatial. Obtained from Erdas Imagine 2010.